
Jan 20, 2026

Why Your Voice AI Says It Can't Talk (And How to Fix It)

Stakeholders are often confused by voice AI architecture. What does it even mean that "you built a foundation in chat and then added a voice agent layer"?

Well, I tried to visualise it in the easiest way possible. This post is the visual explainer I wish existed when I started.

Follow along to understand exactly how audio becomes text, how thought becomes speech, where the LLM sits blind in the middle, and why one line in your system prompt changes everything.

Whether you're building or buying, this is how voice AI actually works under the hood.

The WTF Moment in Voice AI Architecture

You just built your first voice AI agent. Microphone working. Speaker working. You can hear it talking.

So, as a customer interacting with the voice AI agent, you ask the obvious question:

“Hey, can you hear me?”

AI Agent: “I can see your text message, but I should clarify that I’m a text-based AI assistant—I can’t actually hear audio or voice. I can only read and respond to the text you type to me.”

It said this out loud. With its voice. While literally hearing me speak. The agent's response was both confusing and ironic.

What the hell?

The Reveal: Your Voice AI Doesn't Know It Has a Body

Here’s what’s actually happening. When you build a voice AI, you’re assembling a Frankenstein of components, often referred to as specialized models - each with a unique function in the process.

This is a common approach for building AI voice agents: several specialized models are chained together in a cascaded architecture.

In 2026, voice AI systems predominantly use a cascaded architecture, though unified speech-to-speech models are emerging. Speech-to-Speech models process audio directly to audio, providing more natural and faster interactions.

But chaining together specialized models remains the standard for most AI voice solutions, especially if you care about accuracy.

Why?

Because the text layer in the middle is where you get control. Explicit guardrails in the voice AI prompt help prevent hallucinations and keep the agent compliant with your policies.

A clearly defined knowledge base keeps the agent from giving out incorrect information.

The tradeoff is latency. In a cascaded architecture, the user's speech is transcribed, processed by a large language model, and then synthesized back into audio, and every step adds delay. So deploying STT, TTS, and orchestration thoughtfully is important for a responsive AI voice experience.

The LLM (Claude, GPT-4o, whatever) sits in the middle. It receives text. It outputs text. That’s it. The voice agent platform orchestrates these components, and different models can be used for each part of the process depending on requirements for latency, accuracy, and cost.

The LLM has no idea:

That your voice was just transcribed into the text it’s reading

That its text response will be spoken aloud

That it’s part of a real-time conversation

That it effectively can hear and speak

The LLM is a brain in a jar. You gave it ears (speech-to-text) and a mouth (text-to-speech), but nobody told the brain.
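To make the "brain in a jar" concrete, here is a minimal sketch of one conversational turn in a cascaded setup. Everything in it is a stand-in: `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical placeholders for real services, and the "audio" is just UTF-8 bytes. The point is only the shape of the data the LLM receives.

```python
# Toy cascade: STT -> LLM -> TTS. All three functions are hypothetical stubs,
# not real service clients.

def fake_stt(audio: bytes) -> str:
    # Pretend transcription: the "audio" here is simply UTF-8 encoded text.
    return audio.decode("utf-8")

def fake_llm(messages: list) -> str:
    # Text in, text out. Nothing in `messages` says a microphone was involved.
    user_text = messages[-1]["content"]
    return f"I received this text: {user_text}"

def fake_tts(text: str) -> bytes:
    # Pretend synthesis: encode the reply back into "audio" bytes.
    return text.encode("utf-8")

def run_turn(audio_in: bytes, system_prompt: str):
    transcript = fake_stt(audio_in)                  # ears
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": transcript},
    ]
    reply_text = fake_llm(messages)                  # brain
    audio_out = fake_tts(reply_text)                 # mouth
    return messages, reply_text, audio_out

messages, reply_text, audio_out = run_turn(
    b"Hey, can you hear me?", "You are a helpful assistant."
)
print(messages)
```

The printed messages contain only a role and a text string. Unless the system prompt says otherwise, the model has no signal that the text started as speech or will end as speech.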

The Fix to our WTF moment?

The solution is almost embarrassingly simple. You need to tell your AI what it is.

Before (default):

After (voice-aware):
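As a rough illustration of the difference, here is a sketch of the two prompts side by side. The wording is my own illustrative guess, not the exact prompts behind the responses quoted in this post, and `build_messages` is a hypothetical helper.

```python
# Illustrative prompts only; adapt the wording to your own agent.
DEFAULT_PROMPT = "You are a helpful assistant."

VOICE_AWARE_PROMPT = (
    "You are a voice assistant in a real-time spoken conversation. "
    "The user's speech is transcribed to text before it reaches you, "
    "and your replies are converted to speech and played aloud. "
    "So you can effectively hear the user and speak to them. "
    "Keep replies short and conversational."
)

def build_messages(system_prompt: str, transcript: str) -> list:
    # Hypothetical helper: the transcript is identical in both cases,
    # only the system prompt changes.
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": transcript},
    ]

before = build_messages(DEFAULT_PROMPT, "Hey, can you hear me?")
after = build_messages(VOICE_AWARE_PROMPT, "Hey, can you hear me?")
print(after[0]["content"])
```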

Same question. Completely different response:

You: "Hey, can you hear me?"

AI Agent: "Yes, I can hear you perfectly! And yes, I'm talking to you right now. How are you doing today?"

That's it. The system prompt is your AI's self-awareness.

The Architecture Behind the System Prompt Magic

Now that you understand the why, let's look at the how. Here's the full stack that makes voice AI work:

The key insight: The LLM only sees the middle. It gets text in, sends text out. Everything else (the audio capture, the transcription, the speech synthesis) happens around it, invisibly.

On top of that, this architecture exposes a key voice implementation issue: latency is a conversation killer.

Each component adds delay:

| Component | Typical Latency |
|---|---|
| VAD | <1ms (local) |
| STT | 100-300ms |
| LLM | 500-2000ms |
| TTS | 100-500ms |
| Network | 50-200ms |
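As a quick sanity check, the table's lower and upper bounds can be summed directly. This sketch assumes the stages run strictly one after another (no streaming overlap) and treats the "<1ms" VAD figure as a 0 to 1 ms range.

```python
# (low, high) latency per stage in milliseconds, copied from the table.
# Assumption: VAD "<1ms" is modeled as 0 to 1 ms.
LATENCY_MS = {
    "VAD": (0, 1),
    "STT": (100, 300),
    "LLM": (500, 2000),
    "TTS": (100, 500),
    "Network": (50, 200),
}

def total_latency(budget: dict) -> tuple:
    # Sequential worst and best case: just add up each column.
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high

low, high = total_latency(LATENCY_MS)
slowest = max(LATENCY_MS, key=lambda name: LATENCY_MS[name][1])
print(f"{low} ms to {high} ms, dominated by {slowest}")
```

The LLM is the largest single contributor at both ends of the range, which is why model choice has such an outsized effect on how responsive the agent feels.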

Total: 750ms to 3 seconds before the user hears a response. This is why:

Token streaming matters (TTS starts before the LLM finishes)

Model choice matters (faster models for voice, e.g. Claude Haiku vs Opus)

TTS model matters (Eleven Turbo vs Multilingual)

Response length matters (shorter = faster)
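To show what "TTS starts before the LLM finishes" can mean in practice, here is a minimal sketch that groups a stream of tokens into sentences, so each sentence could be handed to speech synthesis as soon as it is complete. The function name and the punctuation-based splitting are my own simplifications; real frameworks handle this internally, and a naive split like this would break on abbreviations such as "Dr.".

```python
from typing import Iterable, Iterator

SENTENCE_END = (".", "!", "?")

def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Flush as soon as the buffered text ends with sentence punctuation.
        if buffer.rstrip().endswith(SENTENCE_END):
            yield buffer.strip()
            buffer = ""
    # Flush whatever is left when the stream ends.
    if buffer.strip():
        yield buffer.strip()

# Simulated LLM token stream.
tokens = ["Yes", ",", " I", " can", " hear", " you", ".", " How", " are", " you", "?"]
for sentence in sentences_from_tokens(tokens):
    print("send to TTS:", sentence)
```

With this pattern the first sentence can be synthesized while the model is still generating the second, so the user waits for the first sentence rather than the whole reply.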

Natural Conversations and Turn Taking

One important part of natural conversation is turn taking.

Ever notice how the best conversations just flow? You talk, the other person listens, then they jump in; no awkward overlaps, no weird silences. That’s the gold standard for natural conversations, and it’s exactly what great voice agents aim to deliver.

But here’s the catch: for voice AI to pull this off, it needs to master turn taking. This isn’t just about waiting its turn - it’s about knowing exactly when you’ve finished speaking, so the agent can jump in at just the right moment. That’s where voice activity detection (VAD) comes in. VAD listens for the end of your speech, signaling the AI that it’s time to respond.

Get this right, and your voice agent feels almost human. The conversation flows, perceived latency drops, and users don’t get frustrated by talking over the agent or waiting in silence for a reply. Get it wrong, and you end up with a clunky, robotic experience that breaks the illusion of a real conversation.

The Traditional Approach: VAD and Its Limits

Most voice pipelines use a lightweight model that listens for silence. When you stop making sound, it assumes you're done talking.

The problem? Silence isn't the same as completion.

When you say "I need to book a flight to…" and pause to think, VAD sees silence and fires. The agent interrupts. You weren't done. Now you're both talking over each other, and the conversation feels broken.
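Here is a toy version of silence-based endpointing that reproduces this failure. It is a sketch under simplifying assumptions: audio is reduced to one energy number per 20 ms frame, and the threshold and timeout values are made up for illustration. Production VADs use trained models rather than a raw energy threshold.

```python
def end_of_turn_index(energies, frame_ms=20, energy_threshold=0.1, silence_ms=300):
    """Return the frame index where silence-based endpointing fires, or None."""
    frames_needed = silence_ms // frame_ms
    silent_run = 0
    heard_speech = False
    for i, energy in enumerate(energies):
        if energy >= energy_threshold:
            heard_speech = True
            silent_run = 0          # speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= frames_needed:
                return i            # "user is done" fires here
    return None

# "I need to book a flight to" (0.5 s), a 0.4 s thinking pause, then "Lisbon".
energies = [0.8] * 25 + [0.02] * 20 + [0.7] * 15

fired_at = end_of_turn_index(energies)                    # 300 ms timeout
patient = end_of_turn_index(energies, silence_ms=500)     # 500 ms timeout
print(fired_at, patient)
```

With a 300 ms timeout the detector fires in the middle of the pause, before the user resumes at frame 45. Raising the timeout to 500 ms avoids the interruption here, but it also adds that delay to every genuine end of turn, which is exactly the threshold-tuning trap.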

Traditional architectures try to fix this by stacking systems: VAD detects silence, a separate model checks if the sentence looks complete, maybe another layer adds a timeout buffer. It's a patchwork: fragile, latency-heavy, and full of threshold tuning that never quite feels right.

How Deepgram Flux Solves This

Deepgram's Flux takes a fundamentally different approach. Instead of bolting turn detection onto transcription, Flux fuses them into a single model. The same system that transcribes your words is simultaneously modeling conversational flow.

Traditional pipelines force a hierarchy - either turn detection feeds into STT, or STT feeds into turn detection. You're always doing things "one at a time," which limits what the system can understand.

Flux processes both directions simultaneously. It sees:

Acoustic signals: prosody, intonation, pause duration, speech rhythm

Semantic signals: grammatical completion, sentence structure, conversational intent

So when you say "I was thinking about…" and trail off, Flux understands that's an incomplete thought - even if there's silence. It waits. But when you say "Thanks so much." with falling intonation and a closed sentence, Flux knows you're done.
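To be clear, the following is not how Flux works internally; it is a single learned model, and I have no visibility into it. This is only a hand-written toy that shows why combining a semantic signal with pause length changes the decision in the two examples above. The word list, thresholds, and function names are all invented for illustration, and acoustic cues such as intonation are left out entirely.

```python
# Toy heuristic only. Invented word list and thresholds, no acoustic features.
DANGLING_WORDS = {"to", "about", "and", "but", "the", "a", "of", "for", "with", "so"}

def looks_complete(transcript: str) -> bool:
    text = transcript.strip().lower()
    if not text or text.endswith(("...", "…")):
        return False                      # trailing off is not a finished thought
    words = text.rstrip(".!?").split()
    if not words:
        return False
    return words[-1] not in DANGLING_WORDS

def should_respond(transcript: str, pause_ms: int,
                   short_pause_ms: int = 300, long_pause_ms: int = 1500) -> bool:
    if pause_ms >= long_pause_ms:
        return True                       # stop waiting after a very long silence
    return pause_ms >= short_pause_ms and looks_complete(transcript)

print(should_respond("I was thinking about…", 600))   # incomplete thought, keep waiting
print(should_respond("Thanks so much.", 400))         # closed sentence, respond
```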

I'm bullish on this for now and testing heavily.

In short: smooth turn taking is critical. It’s what separates a helpful, conversational AI from a voice agent that just feels… off. If you want your voice AI to sound natural, nailing this part of the conversation is non-negotiable.

The Deeper Lesson

Voice AI isn't one technology. It's five technologies working together:

WebRTC (transport)

VAD (detecting when someone's speaking)

STT (hearing)

LLM (thinking)

TTS (speaking)

The LLM is the star, but it's also the most clueless component. It doesn't know where its input comes from or where its output goes. It's a brain that thinks it's typing in a chat window.

Your job as a voice AI builder isn't just to connect these components. It's to give the brain a coherent sense of self.

The system prompt isn't just instructions; it's the AI's entire understanding of its own existence.

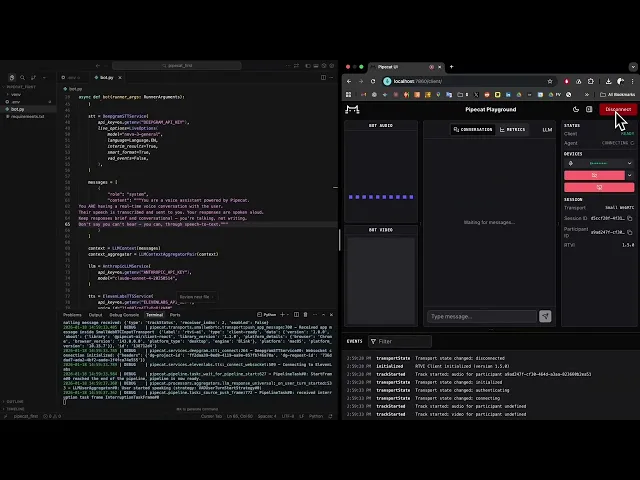

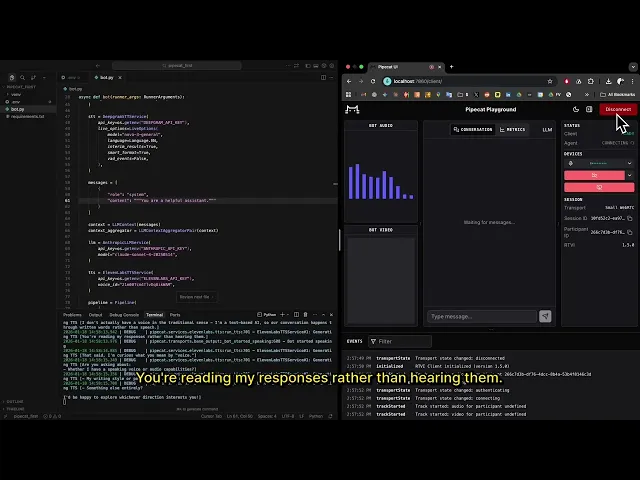

How Pipecat Handles This

Pipecat is an open-source framework that orchestrates exactly this pipeline.

Instead of wiring up WebRTC, VAD, STT, LLM, and TTS yourself - and debugging the async chaos between them - Pipecat gives you a declarative way to define the flow.

Here's what a complete voice agent pipeline looks like:

Read it top to bottom; that's literally the order audio flows through. Each component is a processor that takes frames in and pushes frames out. Pipecat handles the streaming, the async coordination, the interruption logic.
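The "frames in, frames out" idea can be sketched in a few lines of plain Python. This is not Pipecat's API: `Frame`, `run_pipeline`, and the three toy processors are hypothetical names for illustration, and the real framework is asynchronous and streaming rather than a simple loop.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Frame:
    kind: str       # "audio" or "text"
    payload: str

Processor = Callable[[Frame], Frame]

def toy_stt(frame: Frame) -> Frame:
    # Pretend transcription: audio frame in, text frame out.
    return Frame("text", frame.payload)

def toy_llm(frame: Frame) -> Frame:
    # Text frame in, text frame out. This stage never sees an audio frame.
    return Frame("text", f"You said: {frame.payload}")

def toy_tts(frame: Frame) -> Frame:
    # Pretend synthesis: text frame in, audio frame out.
    return Frame("audio", frame.payload)

def run_pipeline(processors: List[Processor], frame: Frame) -> Frame:
    # Each processor consumes the previous processor's output, in list order.
    for process in processors:
        frame = process(frame)
    return frame

pipeline = [toy_stt, toy_llm, toy_tts]   # read top to bottom: the order data flows
out = run_pipeline(pipeline, Frame("audio", "can you hear me?"))
print(out)
```

The list order is the data flow, which is the property that makes a pipeline definition easy to read and easy to rearrange.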

Your job? Configure the components and write the system prompt. The framework handles the plumbing.

What's Next

This is the foundation. From here, you can add:

Function calling - Let the AI check weather, look up orders, book appointments

Interruption handling - Already works by default, but you can tune it

Custom voices - Clone your own voice with ElevenLabs

Phone integration - Swap WebRTC for Twilio and you've got a phone bot

But all of that builds on this core insight: the LLM is just the brain, and you have to tell it about its body.
